Evolution of Conversion Attribution at Grab | Biweekly Engineering - Episode 29

How Grab built a comprehensive conversion attribution platform from scratch

Hello hello dear readers! Happy Tuesday to y’all!

Another day, another episode of Biweekly Engineering has arrived in your inbox! Are you excited to have some fun reading interesting engineering blog posts? So am I!

This episode is a bit special for me. Today I feature an article from Grab Engineering which discusses how Grab built an end-to-end comprehensive conversion attribution platform. I was a founding member of the team that built this and a few more systems which were responsible for ads reporting, billing, and conversion attribution. It’s a great feeling to feature our own work! :)

Without further ado, let’s begin.

Clarke Quay in Singapore

How Grab Built Its Conversion Attribution Platform

What is conversion attribution?

The term conversion attribution might not immediately ring a bell for many of my readers, and that is to be expected. It is used mostly in the world of marketing and advertising. Let me explain with an example.

Imagine you are a proud owner of an online business. To attract more and more customers to your carefully crafted handmade products, you have decided to run some ads on Facebook.

As an advertiser and a business owner, you would want clear visibility of the impact the ads have on your business. Advertising is an investment, and it’s important for you to know how much you get in return for your investment.

This is where an advertising platform (in this example, Facebook) needs to show you how impactful your ads are. How could they do it?

Conversion attribution in ads is the process of attributing sales of your products to the ads you have run, which helps you understand how much impact the ads have had on your business.

Is my ad leading to orders for my products?

As you can imagine, answering this question for a large business like Grab comes with its own set of challenges. Fortunately, in today’s article, we get a peek at how Grab built and evolved its conversion attribution platform.

Evolution of the platform

That was an unusually long introduction. I wanted to set the context so that the article is more approachable for everyone. Let’s now briefly discuss the evolution of the platform.

Early days

Everything starts small.

The early method of conversion attribution at Grab was ad hoc queries run by analysts.

Yes, not very scalable and limited in capabilities.

Kappa architecture

The first end-to-end conversion attribution platform was built using Kappa architecture - an architectural pattern where stream processing is used to process data in real time. The pattern also relies on a messaging system with persistence capabilities (like Apache Kafka), which makes it possible to reprocess historical data.
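To see why a persistent log matters, here is a tiny Python sketch of the replay idea. It is only an illustration, not how Kafka or Grab's platform is implemented: `EventLog` is a hypothetical in-memory stand-in for a persistent topic, and the `is_bot` field is made up to show a change in processing logic.

```python
from typing import List


class EventLog:
    """Append-only in-memory log; a toy stand-in for a persistent topic."""

    def __init__(self) -> None:
        self._records: List[dict] = []

    def append(self, record: dict) -> int:
        """Store a record and return its offset in the log."""
        self._records.append(record)
        return len(self._records) - 1

    def read_from(self, offset: int) -> List[dict]:
        """Return every record from the given offset onwards."""
        return self._records[offset:]


def count_clicks_v1(records: List[dict]) -> int:
    """Original logic: every click counts."""
    return sum(1 for r in records if r["type"] == "click")


def count_clicks_v2(records: List[dict]) -> int:
    """Revised logic: ignore clicks flagged as bots (hypothetical field)."""
    return sum(
        1 for r in records
        if r["type"] == "click" and not r.get("is_bot", False)
    )


log = EventLog()
log.append({"type": "click"})
log.append({"type": "click", "is_bot": True})
log.append({"type": "impression"})

# Because the log keeps history, new logic can be replayed from offset 0.
clicks_before = count_clicks_v1(log.read_from(0))
clicks_after = count_clicks_v2(log.read_from(0))
```

The point is that the same stored events can be run through a newer version of the stream logic without a separate batch system.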

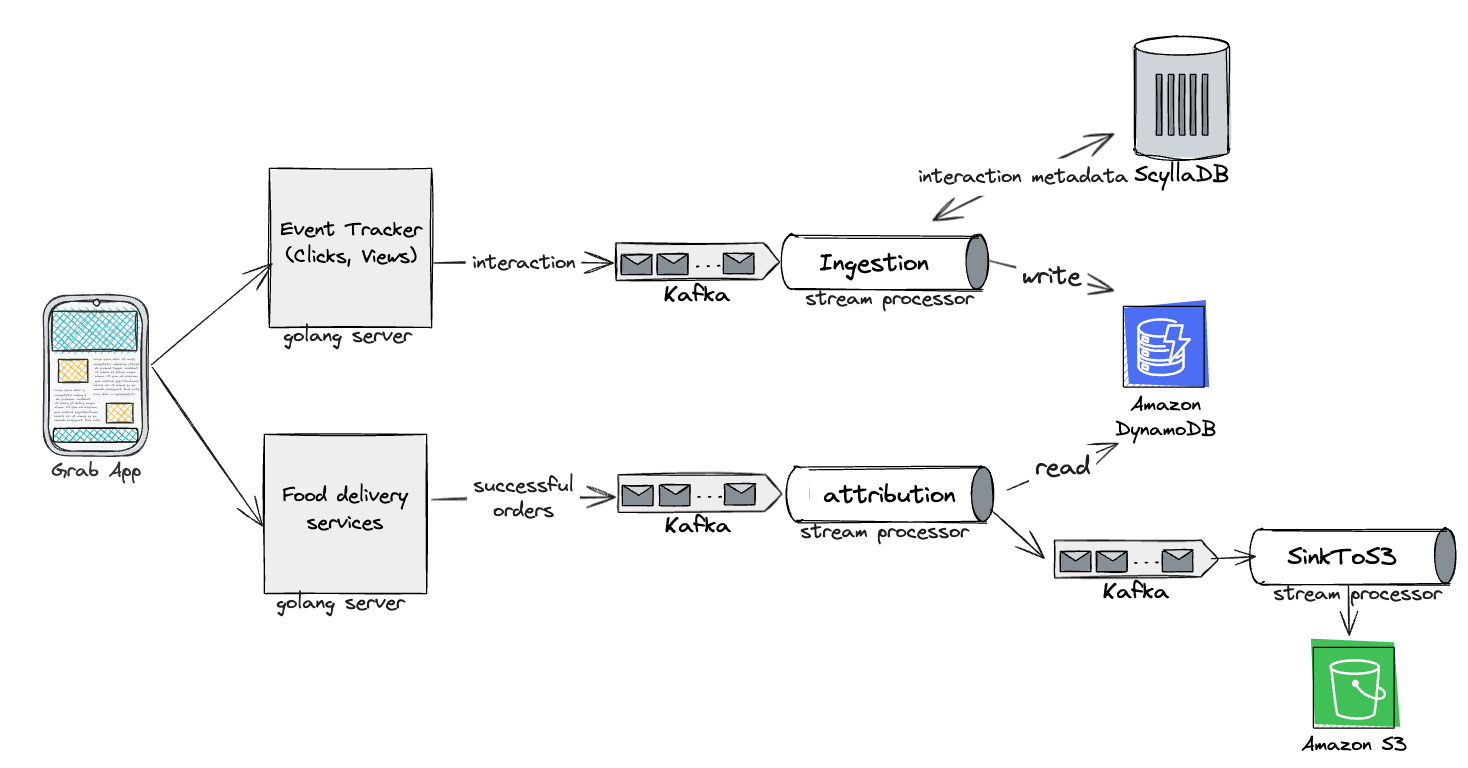

As the diagram shows, ad interaction and successful order events are processed using stream processors on top of Kafka:

- Ad events are ingested into DynamoDB by the Ingestion processor
- Attribution logic runs in the Attribution processor
- In the Attribution processor, for each order event, the relevant ad events are read from DynamoDB and the resulting conversion events are written back to Kafka
- Conversion events are persisted in AWS S3 for downstream systems to consume
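The per-order step can be sketched in a few lines of Python. This is a minimal illustration under my own assumptions, not Grab's code: `AdEventStore` is a hypothetical in-memory stand-in for the DynamoDB table, the event fields are invented, and the last-touch rule (credit the most recent ad interaction inside the attribution window) is just one possible attribution model.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class AdEvent:
    user_id: str
    ad_id: str
    timestamp: int  # event time in epoch seconds


@dataclass(frozen=True)
class OrderEvent:
    user_id: str
    order_id: str
    timestamp: int  # event time in epoch seconds


@dataclass(frozen=True)
class ConversionEvent:
    order_id: str
    ad_id: str


class AdEventStore:
    """Hypothetical in-memory stand-in for the ad event table."""

    def __init__(self) -> None:
        self._by_user: Dict[str, List[AdEvent]] = {}

    def put(self, event: AdEvent) -> None:
        self._by_user.setdefault(event.user_id, []).append(event)

    def get(self, user_id: str) -> List[AdEvent]:
        return list(self._by_user.get(user_id, []))


def attribute(
    order: OrderEvent, store: AdEventStore, window_seconds: int
) -> Optional[ConversionEvent]:
    """Assumed last-touch rule: pick the latest ad event within the window."""
    candidates = [
        e for e in store.get(order.user_id)
        if 0 <= order.timestamp - e.timestamp <= window_seconds
    ]
    if not candidates:
        return None  # no ad interaction to credit for this order
    last_touch = max(candidates, key=lambda e: e.timestamp)
    return ConversionEvent(order_id=order.order_id, ad_id=last_touch.ad_id)
```

In a real pipeline the returned conversion event would be produced to a Kafka topic; here it is simply returned so the logic stays easy to follow.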

Lambda architecture

The Kappa-based platform ran into several challenges: the cost of real-time data processing, scalability for longer attribution windows, latency and lag issues, out-of-order events leading to misattribution, and the complexity of implementing multi-touch attribution models.
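The out-of-order problem is the easiest one to show with a toy example. The Python sketch below assumes a last-touch rule and made-up event tuples of the form `(kind, user_id, id, event_time)`; none of this comes from the Grab article. It processes events strictly in the order they arrive, the way a naive stream processor would.

```python
from typing import Dict, List, Tuple

Event = Tuple[str, str, str, int]  # (kind, user_id, id, event_time)


def attribute_in_given_order(
    events: List[Event], window_seconds: int
) -> List[Tuple[str, str]]:
    """Attribute each order using only the ad events seen so far."""
    seen_ads: Dict[str, List[Tuple[str, int]]] = {}
    conversions: List[Tuple[str, str]] = []
    for kind, user_id, ident, ts in events:
        if kind == "ad":
            seen_ads.setdefault(user_id, []).append((ident, ts))
            continue
        # Order event: assumed last-touch rule over ads already observed.
        candidates = [
            (ad_id, ad_ts) for ad_id, ad_ts in seen_ads.get(user_id, [])
            if 0 <= ts - ad_ts <= window_seconds
        ]
        if candidates:
            ad_id, _ = max(candidates, key=lambda c: c[1])
            conversions.append((ident, ad_id))
    return conversions


# The click on ad-b happened at t=200 but arrives after the order at t=300.
arrival_order: List[Event] = [
    ("ad", "u1", "ad-a", 100),
    ("order", "u1", "o1", 300),
    ("ad", "u1", "ad-b", 200),
]

streamed = attribute_in_given_order(arrival_order, 3600)
# -> [("o1", "ad-a")]: the late ad-b click is missed

by_event_time = sorted(arrival_order, key=lambda e: e[3])
corrected = attribute_in_given_order(by_event_time, 3600)
# -> [("o1", "ad-b")]: with complete, time-ordered data the credit moves
```

The order is credited to `ad-a` in the streamed run only because the `ad-b` event had not arrived yet, which is exactly the kind of misattribution mentioned above.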

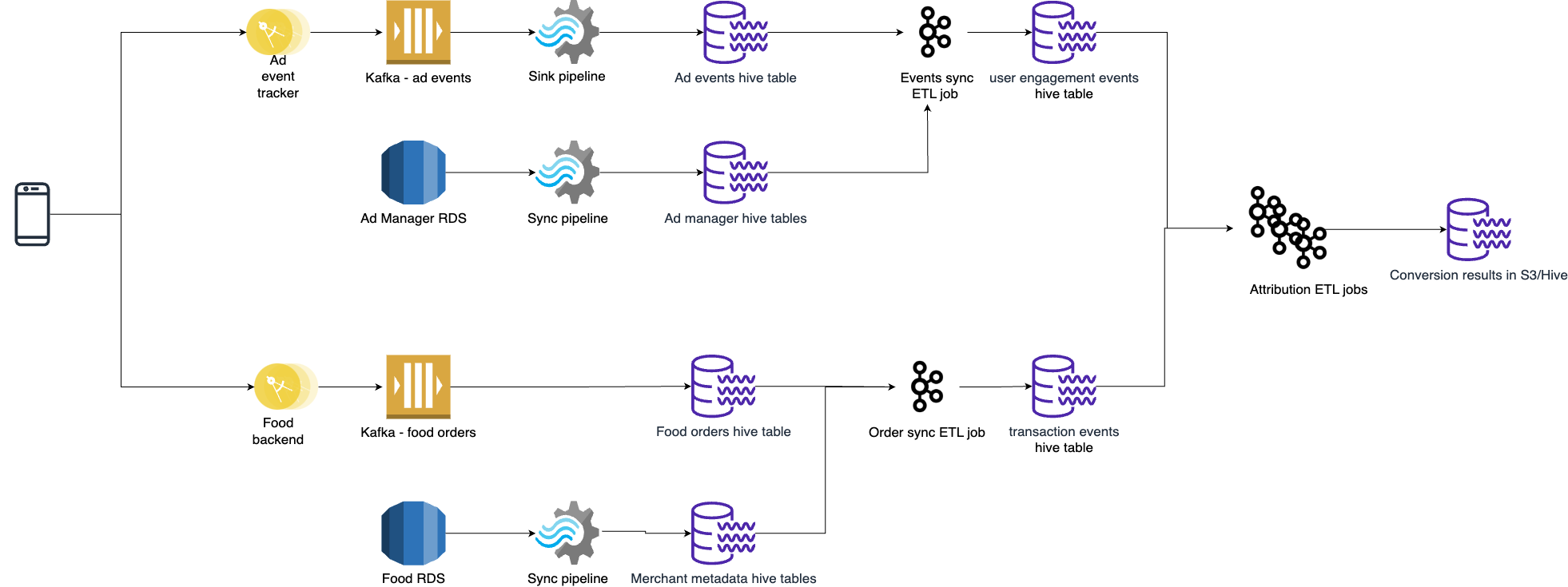

In the next phase of the evolution, a full-fledged attribution platform was built using Lambda architecture.

In Lambda architecture, both batch and stream processing are implemented separately. Yes, as you correctly guessed, it means you now have two different systems to manage.

The diagram above shows the offline platform for conversion attribution. In this flow, there are multiple ETL jobs extracting and processing ad and order events. Eventually, enriched events are stored in new tables which are then read by the attribution ETL pipelines to create conversion results.
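As a rough picture of what the final attribution step of such a batch flow could look like, here is a small Python sketch. Everything in it is an assumption for illustration: the row shapes are invented, plain lists stand in for the enriched tables, and the equal-split (linear) multi-touch rule is just one well-known model, not necessarily the one Grab uses.

```python
from typing import Dict, List, Tuple

AdRow = Tuple[str, str, int]     # (user_id, ad_id, event_time)
OrderRow = Tuple[str, str, int]  # (user_id, order_id, event_time)


def run_attribution_batch(
    ad_rows: List[AdRow],
    order_rows: List[OrderRow],
    window_seconds: int,
) -> Dict[str, Dict[str, float]]:
    """Return {order_id: {ad_id: credit}} for one batch of enriched rows."""
    # Group ad interactions by user, like a join key in an ETL job.
    ads_by_user: Dict[str, List[Tuple[str, int]]] = {}
    for user_id, ad_id, ts in ad_rows:
        ads_by_user.setdefault(user_id, []).append((ad_id, ts))

    results: Dict[str, Dict[str, float]] = {}
    for user_id, order_id, order_ts in order_rows:
        # Distinct ads the user interacted with inside the window.
        touched = sorted({
            ad_id for ad_id, ts in ads_by_user.get(user_id, [])
            if 0 <= order_ts - ts <= window_seconds
        })
        if touched:
            # Assumed linear model: split the credit equally.
            credit = 1.0 / len(touched)
            results[order_id] = {ad_id: credit for ad_id in touched}
    return results
```

Because a batch job sees the full set of rows for the period at once, arrival order does not matter here, and swapping in a different credit rule is a local change to one function.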

Note that Lambda architecture is not a silver bullet. The article explicitly discusses the challenges behind this solution and potential future improvements.

And we wrap it up here today. I hope the article leaves you curious to explore different architectural patterns and their use cases. Go ahead and figure them out!

See you soon, cheers! 🤝