Generative AI Gateway at Uber | Biweekly Engineering - Episode 33

Uber's innovation with GenAI | How Facebook designed the infrastructure to power up cloud gaming

Welcome to the 33rd episode of Biweekly Engineering! 🙋‍♂️

Today, we have two fantastic blog posts from two renowned companies: Uber and Meta.

In the first article from Uber, we get introduced to the dynamic landscape of LLM systems. And in the second one, we learn about cloud gaming architecture at Meta.

Sounds fun? Let’s jump in!

GenAI Gateway at Uber

Uber has introduced the GenAI Gateway. This is a unified platform developed by its Michelangelo team, which streamlines the integration of Large Language Models (LLMs) across various departments.

As the name gateway suggests, the platform offers a consistent interface for accessing models from multiple vendors.

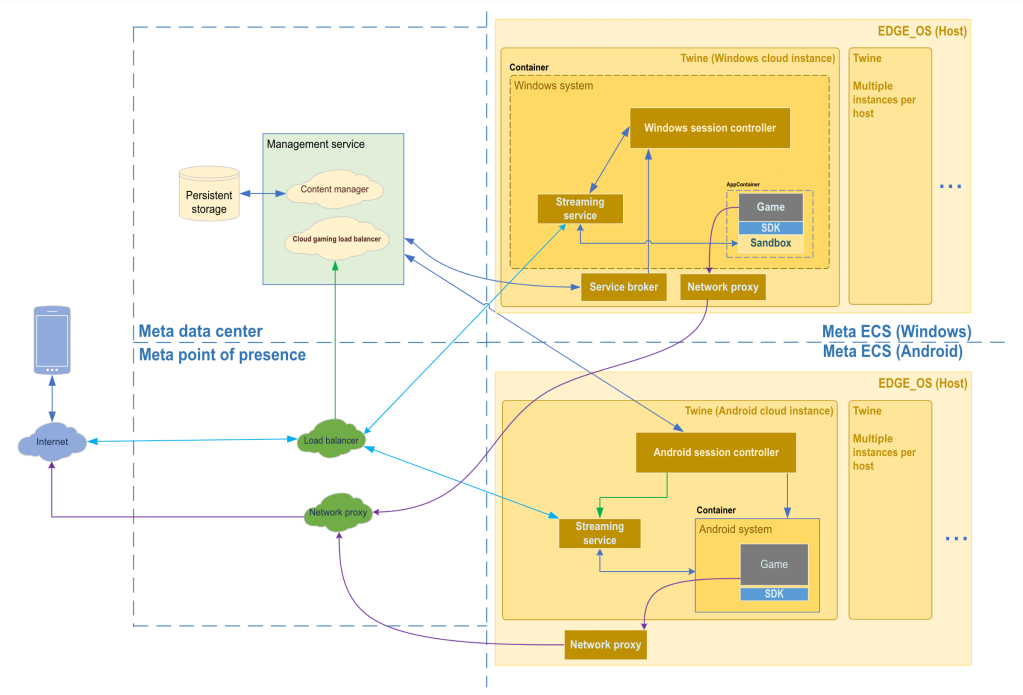

Uber GenAI gateway architecture - from the article

The diagram above shows the architecture of the gateway. It depicts various actors and components in the overall design of the system. Key takeaways from the system are:

The gateway's interface mirrors the OpenAI API. This design decision helps in two ways: developers get to use a well-known interface, and the gateway stays aligned with the cutting-edge advancements in the field led by OpenAI.

The gateway is a Go service. Uber’s backend systems are mostly written in Go, and the GenAI gateway is no different. The team did not have suitable ready-made Go client implementations for all the different model vendors, so they had to get their hands dirty.

In addition to its core functionality, the gateway integrates authentication, authorization, metrics, and audit logging. These extra components are similar to what you would expect in an API gateway.

At peak, the system serves 25 requests per second. The gateway is being widely adopted: around 30 teams were using it at the time the article was published.
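To make the takeaways above a bit more concrete, here is a minimal sketch of the gateway idea in Python (the real service is written in Go, and its internals are not shown in this post). The names `Gateway`, `echo_adapter`, `allowed_callers`, and the model name used below are hypothetical. The request and response dictionaries only imitate a small subset of the OpenAI chat completions shape.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

Message = Dict[str, str]


def echo_adapter(messages: List[Message]) -> str:
    """Hypothetical vendor adapter: a real one would call the vendor's API."""
    return "echo: " + messages[-1]["content"]


@dataclass
class Gateway:
    # Maps a model name to the adapter that knows how to talk to its vendor.
    adapters: Dict[str, Callable[[List[Message]], str]]
    # Callers allowed to use the gateway (stand-in for real auth).
    allowed_callers: Set[str]
    audit_log: List[Dict[str, str]] = field(default_factory=list)

    def chat_completions(self, caller: str, request: Dict) -> Dict:
        model = request["model"]
        if caller not in self.allowed_callers:
            self.audit_log.append({"caller": caller, "model": model, "status": "denied"})
            raise PermissionError(f"caller {caller!r} is not authorized")
        adapter = self.adapters.get(model)
        if adapter is None:
            self.audit_log.append({"caller": caller, "model": model, "status": "unknown_model"})
            raise ValueError(f"no adapter registered for model {model!r}")
        text = adapter(request["messages"])
        self.audit_log.append({"caller": caller, "model": model, "status": "ok"})
        # Same response shape regardless of which vendor served the request.
        return {
            "model": model,
            "choices": [
                {"index": 0, "message": {"role": "assistant", "content": text}}
            ],
        }
```

The point of the design is that callers only ever see one request and response shape. Swapping or adding a vendor means registering another adapter, not changing every client.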

The article also gives us a glimpse of the challenges faced in building such a system, such as handling Personally Identifiable Information (PII) and ensuring reasonable latency and quality. Lastly, we get to learn about a use case of GenAI at Uber, and how the gateway fits into it architecturally.
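As a rough illustration of the PII challenge, one common approach is to mask sensitive values before a prompt leaves for an external vendor. The sketch below is an assumption made for illustration, not the method described in Uber's article. The function name `redact_pii` and both regular expressions are hypothetical and far too simple for production use.

```python
import re

# Deliberately simple patterns, for illustration only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("<EMAIL>", text)
    text = PHONE_RE.sub("<PHONE>", text)
    return text
```

Scanning every prompt like this adds work to each request, which is one reason PII handling and latency pull against each other.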

Meta’s Cloud Gaming Infrastructure

In line with its Metaverse vision, Meta has heavily invested in cloud gaming. The article briefly showcases the core components of Meta’s cloud gaming architecture from a high-level point of view.

What is Cloud Gaming?

Traditionally, a game runs on the user's device. The more resources a game requires, the higher-end the device a user needs to play it. This is not very accessible. Not everyone can keep up with the increasing resource demands of modern games.

Enter cloud gaming.

In cloud gaming, the game itself runs in the cloud.

The end result? Users don’t need high-end devices anymore!

Cloud gaming is about accessibility — bringing gaming to people regardless of the device they’re using or where they’re located in the world.

The Cloud Gaming Infrastructure

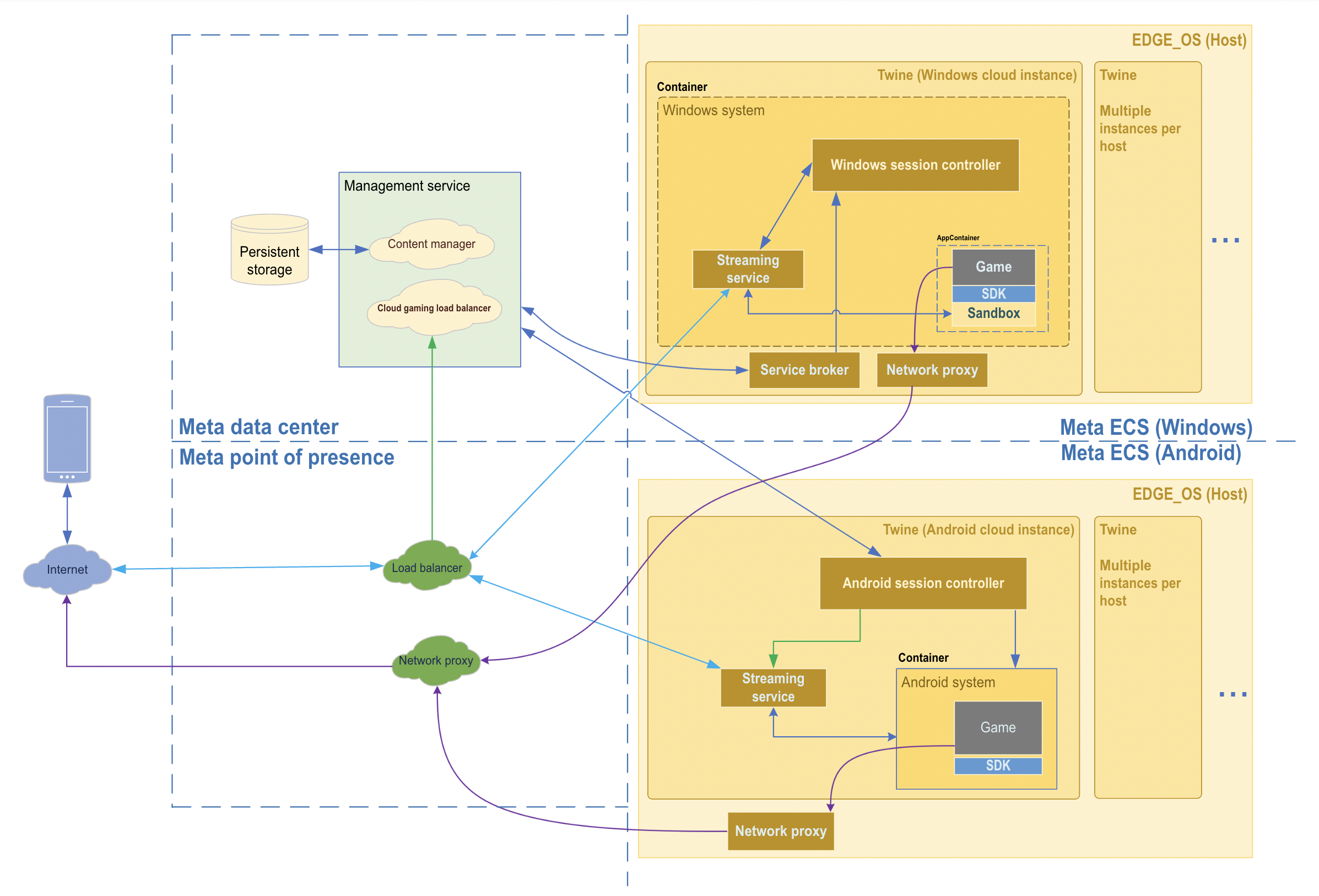

The simplified diagram from the blog

The diagram depicts a high-level design of Meta’s cloud gaming infra. Let’s briefly walk through it.

Edge Computing Sites (ECS)

In cloud gaming, the greatest challenge is latency. How do you make sure users don’t notice that every keystroke travels across a network and comes back as video frames?

Think about it. When you run a game on your PC, everything happens inside the device. The speed of the game is bounded by how fast the PC is.

On the other hand, in cloud gaming, there is a network involved! Packets traveling through the network add to the latency users perceive. In gaming, seeing jitter while playing is a BIG NO!

This is where edge computing is useful. The idea is to run the game on the edge: closer to the users. The closer the cloud infrastructure is to the users, the lower the latency added by the network. This helps to achieve ultra-low latency.

But remember, there will still be some latency.
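A quick back-of-the-envelope calculation shows why distance matters. Light in optical fiber travels at roughly two thirds of its speed in a vacuum, about 200 km per millisecond. The sketch below estimates only the propagation part of the round trip and ignores routing, queuing, encoding, and rendering, so real numbers will be higher. The function names and the example distances are assumptions chosen for illustration.

```python
FIBER_KM_PER_MS = 200.0  # approximate speed of light in optical fiber


def round_trip_ms(distance_km: float) -> float:
    """Lower bound on network round-trip time from propagation delay alone."""
    return 2 * distance_km / FIBER_KM_PER_MS


def frame_interval_ms(fps: float) -> float:
    """Time between consecutive frames at a given frame rate."""
    return 1000.0 / fps


if __name__ == "__main__":
    for km in (100, 2000):  # hypothetical edge site vs. distant data center
        print(f"{km} km: at least {round_trip_ms(km):.1f} ms round trip")
    print(f"60 fps frame interval: {frame_interval_ms(60):.1f} ms")
```

Under these assumptions, a server 2000 km away costs at least 20 ms per round trip, which is already longer than one frame at 60 fps. A site 100 km away costs about 1 ms.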

As the diagram above shows, ECS is an edge computing site. Each game runs in a virtual environment inside an ECS. User devices interact with an ECS, which interacts with Meta’s data center when required. But the core functionality of running the game happens inside an ECS.

As the article mentions, containerization of the games is supported by Twine, Meta’s in-house cluster management system.

GPU

Modern games are designed for GPUs, so it’s no surprise that these games run on GPUs in an ECS.

Meta partnered with Nvidia to build a massive game-hosting environment in its edge computing sites.

Video and Audio Streaming

The other core part of the system is fast and reliable video and audio streaming. Low latency is a hard requirement for delivering a smooth gaming experience. Meta adopted WebRTC with SRTP to stream user inputs and media.

When a user takes an action in the game, the following happens:

The action is captured as an event and sent to the gaming server. Here, it lands on an ECS.

A frame is rendered in the game as a result of the user's action. Example: the user clicks ‘jump’, so a corresponding frame or set of frames is generated reflecting the jump command.

The frames are encoded using a video encoder and sent back to the user as UDP packets.

There is a jitter buffer on the gamer’s side to smooth out the rendering of the frames.
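The jitter buffer in the last step can be sketched as follows. This is a simplified, count-based illustration rather than Meta's or WebRTC's implementation: real jitter buffers are time-based and adapt their depth to network conditions. The class name `JitterBuffer` and the `depth` parameter are hypothetical.

```python
import heapq
from typing import List, Optional, Tuple


class JitterBuffer:
    """Holds a few frames so they can be played in order despite network jitter."""

    def __init__(self, depth: int = 3) -> None:
        self.depth = depth  # frames to accumulate before playback starts
        self._heap: List[Tuple[int, str]] = []  # min-heap ordered by sequence number
        self._next_seq = 0  # frames older than this have already been played or skipped

    def push(self, seq: int, frame: str) -> None:
        """Store an arriving frame; frames that arrive too late are dropped."""
        if seq < self._next_seq:
            return
        heapq.heappush(self._heap, (seq, frame))

    def pop(self) -> Optional[str]:
        """Return the oldest buffered frame, or None while the buffer is still filling."""
        if len(self._heap) < self.depth:
            return None
        seq, frame = heapq.heappop(self._heap)
        self._next_seq = seq + 1
        return frame
```

The trade-off is visible in `depth`: a deeper buffer absorbs more reordering and delay variation, but every buffered frame adds to the latency the player feels.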

GPUs are used to encode the video and audio streams.

The article later discusses the shared goals of the metaverse and cloud gaming.

Overall, a good read for anyone unfamiliar with cloud gaming.

That’s it for today. I am happy to learn something new from today’s episode myself, and hope you will learn too. 🙂

Until next time, see you!
