High Availability Best Practices at Netflix - Biweekly Engineering - Episode 21

How Netflix ensures high availability at scale - PayPal's scalable Kafka platform

Lo and behold! The 21st episode of Biweekly Engineering is here! How have you been, dear readers?

Just like the first 20 episodes, in this 21st one, we will learn something new. Let’s do it!

In the majestic village of Iseltwald, Switzerland beneath the veiled sky

High Availability (and CI/CD) Best Practices at Netflix

Netflix built Spinnaker, a multi-cloud platform for continuous integration and continuous delivery (CI/CD) of software systems. In this article, Netflix shares some tips for building highly available systems at scale, an effort that Spinnaker powers at the company.

If you are not familiar with the concept of CI/CD, check the article here:

As I was saying, in the article, Netflix shared some ideas that helped them build highly available systems. Note that all these principles are well-supported by Spinnaker. So if you are using Spinnaker for your use-cases, you are getting all these features out of the box.

Let’s quickly summarise the tips and best practices.

Prefer regional deploys over global ones

While deploying your app, don’t go all in at the same time. Deploy region by region, and observe the impact of the changes in each region. This gives you the scope to quickly roll back or move traffic from an affected region to a healthy one.
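As a rough illustration of the idea, here is a minimal sketch of a region-by-region rollout loop. The `deploy`, `is_healthy`, and `rollback` callables and the region names are hypothetical placeholders, not Spinnaker APIs.

```python
from typing import Callable, List


def deploy_region_by_region(
    regions: List[str],
    deploy: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    rollback: Callable[[str], None],
) -> List[str]:
    """Deploy to one region at a time; stop at the first unhealthy region.

    Returns the regions that were deployed and stayed healthy.
    """
    completed: List[str] = []
    for region in regions:
        deploy(region)
        if not is_healthy(region):
            # Only the region that just changed is rolled back;
            # later regions are never touched.
            rollback(region)
            break
        completed.append(region)
    return completed


# Hypothetical usage: the second region turns out to be unhealthy.
log = []
completed = deploy_region_by_region(
    ["us-east", "eu-west", "ap-south"],
    deploy=lambda r: log.append(("deploy", r)),
    is_healthy=lambda r: r != "eu-west",
    rollback=lambda r: log.append(("rollback", r)),
)
```

In this toy run, the rollout stops at the unhealthy region, and the third region never receives the change.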

Use Red/Black deployment strategy for production deploys

Use the Red/Black (aka Blue/Green) deployment strategy in production. In this strategy, two versions of the system are deployed: Blue is the current version, and Green is the new version. Once Green is tested, live, and healthy, switch traffic from Blue to Green. When everything looks good, decommission Blue altogether.

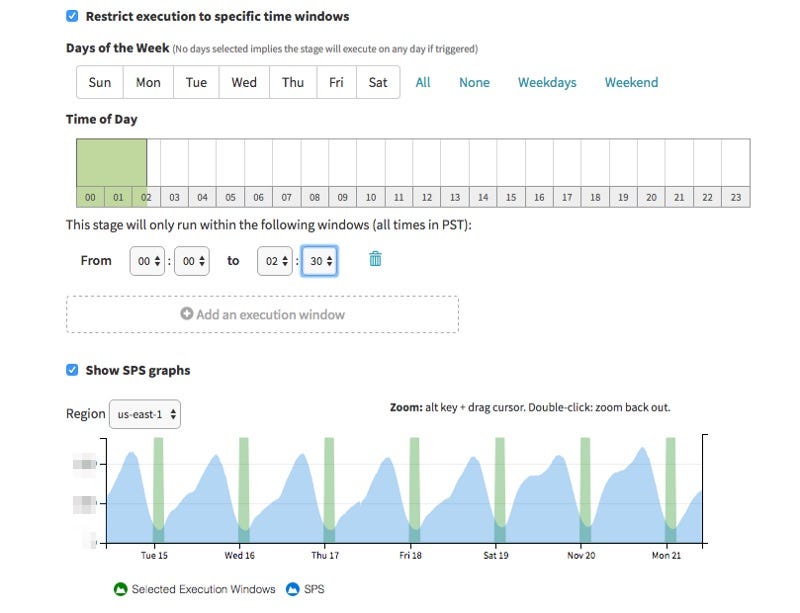
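The switch-over can be sketched with a toy load balancer. This is only an illustration of the strategy under simplified assumptions; the `LoadBalancer` class and its methods are invented for this example and do not reflect how Spinnaker implements it.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class LoadBalancer:
    """Toy router that sends all traffic to one named server group."""

    groups: Dict[str, bool] = field(default_factory=dict)  # group name -> healthy?
    active: str = ""

    def switch_to(self, new_group: str) -> bool:
        """Move traffic to new_group only if it is deployed and healthy."""
        if not self.groups.get(new_group, False):
            return False  # keep serving from the current group
        self.active = new_group
        return True

    def decommission(self, group: str) -> None:
        """Remove an old group; refuse to remove the one serving traffic."""
        if group == self.active:
            raise ValueError("cannot decommission the active group")
        self.groups.pop(group, None)


lb = LoadBalancer(groups={"blue": True, "green": False}, active="blue")
first_try = lb.switch_to("green")   # Green is not healthy yet, so Blue keeps serving
lb.groups["green"] = True           # Green passes its health checks
second_try = lb.switch_to("green")  # traffic moves to Green
lb.decommission("blue")             # Blue is removed once Green looks good
```

Keeping Blue around until Green is confirmed healthy is what makes a fast rollback possible: switching back is just another traffic switch.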

Use deployment windows

Try to deploy during office hours and at off-peak times. That way, if something goes wrong, engineers will be available to respond and fewer users will be affected.

Ensure automatically triggered deploys are not executed during off-hours or weekends

If you have automated deployment triggers, consider the schedule carefully. Deployments should not be triggered during out-of-office hours or weekends.
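A deployment window check can be as small as the sketch below. The 10:00 to 16:00 weekday window is an assumed example, not a value from the Netflix article; pick hours that match your own team and traffic patterns.

```python
from datetime import datetime, time


def in_deployment_window(
    now: datetime,
    start: time = time(10, 0),
    end: time = time(16, 0),
) -> bool:
    """True on weekdays between start (inclusive) and end (exclusive)."""
    is_weekday = now.weekday() < 5  # Monday is 0, Friday is 4
    return is_weekday and start <= now.time() < end
```

An automated trigger could call this before starting a pipeline and simply defer the deployment when it returns `False`.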

Enable Chaos Monkey

Netflix developed and popularised the idea of Chaos Monkey, a tool that randomly terminates real production nodes to ensure that engineers are building resilient services. To know more, check:

Use (unit, integration, smoke) testing and canary analysis to validate code before it is pushed to production

In your CI/CD pipeline, run unit, integration, and smoke tests. After the canary rollout is done, analyse the performance of the deployment. Netflix also built a tool to run this analysis automatically.

Use your judgement about manual intervention

Don’t just rule out manual intervention completely. Sometimes a manual check is useful and easy.

Where possible, deploy exactly what you tested to production

Try to deploy the same build of the app that you tested. This gives you the strongest guarantee that everything is fine.

Regularly review paging settings

If things go wrong, someone may need to respond to the incident. This is why reviewing paging settings regularly is important: it makes sure that when something is off, the right people are alerted quickly enough.

Know how to roll back your deploy quickly

Support seamless rollbacks and know how to perform them quickly. If things go wrong somewhere down the line, rolling back the deployment should be straightforward and common knowledge.

Fail a deployment when instances are not coming up healthy

During a new deployment, instances can go live but still be unhealthy. Make sure no traffic is sent to unhealthy instances. Also, if all the instances are unhealthy, the deployment itself should be cancelled.
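The two rules above (route only to healthy instances, fail the deployment when none are healthy) can be sketched as follows. The function name and the instance IDs are made up for illustration.

```python
from typing import Dict, List, Tuple


def route_or_fail(instance_health: Dict[str, bool]) -> Tuple[str, List[str]]:
    """Return ("failed", []) if no instance is healthy,
    otherwise ("ok", sorted list of healthy instances)."""
    healthy = sorted(name for name, ok in instance_health.items() if ok)
    if not healthy:
        # Nothing can serve traffic, so the deployment should be cancelled.
        return ("failed", [])
    return ("ok", healthy)


partial = route_or_fail({"i-1": True, "i-2": False})
none_up = route_or_fail({"i-1": False, "i-2": False})
```

A real pipeline would usually also enforce a minimum healthy percentage and a timeout; this sketch only covers the all-unhealthy case described in the text.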

For automated deployments, notify the team of impending and completed deployments

Notify the owning team of a deployment - whatever happens with it. Keeping them on the same page is important.

Automate non-typical deployment situations rather than doing one-off manual work

Put one-off scripts or manual procedures into a pipeline so that they can be triggered quickly whenever they are needed in the future. For example, in case of emergency, it’s better to have an emergency deployment pipeline instead of a manual procedure. Yes, you won’t require it every day, but doing it when needed should be quick and easy.

Use preconditions to verify expected state

During deployment stages, always check preconditions and run them explicitly. Don’t assume things are fine by default. Preconditions are conditions that must be validated before running a deployment stage. For example, before running canary deployment, the application build and test stages must be completed successfully.
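A minimal sketch of a precondition gate, assuming a hypothetical `run_stage` helper and a simple dictionary that records the results of earlier stages:

```python
from typing import Callable, Dict, List


def run_stage(
    preconditions: Dict[str, Callable[[], bool]],
    action: Callable[[], None],
) -> List[str]:
    """Run action only if every precondition holds.

    Returns the labels of the preconditions that failed (empty on success).
    """
    failed = [label for label, check in preconditions.items() if not check()]
    if not failed:
        action()
    return failed


# Hypothetical pipeline state: the build passed but the tests did not.
state = {"build": "succeeded", "tests": "failed"}
ran: List[str] = []
failed = run_stage(
    {
        "build succeeded": lambda: state["build"] == "succeeded",
        "tests succeeded": lambda: state["tests"] == "succeeded",
    },
    action=lambda: ran.append("canary"),
)
```

Here the canary stage never runs, and the returned list says exactly which precondition blocked it.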

These are the principles discussed in the article. For details, have a read and try to compare them with the standards followed at your workplace.

How PayPal Built a High Scale Kafka Platform

In this recently published article, PayPal gives us a sneak peek at their Kafka platform. Systems at PayPal send trillions of messages per day to the platform. It’s difficult to fathom how scalable it has to be to handle trillions of messages in a day!

How did PayPal achieve this tremendous feat? Let’s discuss.

Size of Kafka Platform at PayPal

At PayPal, Kafka is used in a variety of use-cases, most of which are common at other companies. Use-cases like first-party tracking, health metrics and aggregations, database synchronisation, log aggregation, batch processing, and analytics are frequently spotted in big companies, and PayPal is no exception.

The platform looks like the following in numbers:

1500 brokers

20000 topics

85+ Kafka clusters

Four 9s (99.99%) of availability

During 2022 Retail Friday, the platform processed 1.3 trillion events, with a reported rate of approximately 21 million events per second!
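A quick back-of-the-envelope check helps put these numbers in perspective. Assuming the 1.3 trillion figure is the total for a single day (an assumption on my part), the daily average works out as follows:

```python
events_per_day = 1.3e12          # assumed to be a single-day total
seconds_per_day = 24 * 60 * 60   # 86,400 seconds
average_rate = events_per_day / seconds_per_day

print(f"{average_rate / 1e6:.1f} million events per second on average")
# prints "15.0 million events per second on average"
```

Under that assumption, the average is about 15 million events per second, so the 21 million figure presumably describes peak throughput rather than the daily average.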

Multi-Zone Deployment

As you can expect, the platform is deployed in multiple geographical zones. Each zone has multiple data centres. According to needs, data is replicated across data centres and zones.

Operating Kafka at Scale

To operate such a platform at scale, a good amount of investment is required. PayPal approached this in a few key areas:

Cluster management

Monitoring and alerting

Configuration management

Enhancements and automation

Cluster Management

Kafka cluster management is conducted with the help of the following four components:

Kafka Config Service

A critical and highly available service that pushes configurations to the Kafka client applications.

Kafka ACLs

PayPal built a platform to manage Access Control Lists (ACLs) for Kafka producers and consumers connecting to different topics. With ACLs, the team strictly manages and monitors which applications access which topics. This ensures security in the platform.
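Conceptually, an ACL check is a deny-by-default lookup. The sketch below is not PayPal's implementation or Kafka's authorizer API; the application names, topic name, and `is_allowed` function are all made up for illustration.

```python
from typing import Dict, Set, Tuple

# (application, topic) -> operations that application may perform on the topic.
ACLS: Dict[Tuple[str, str], Set[str]] = {
    ("checkout-service", "payments.events"): {"produce"},
    ("risk-service", "payments.events"): {"consume"},
}


def is_allowed(app: str, topic: str, operation: str) -> bool:
    """Deny by default: only explicitly granted operations pass."""
    return operation in ACLS.get((app, topic), set())
```

With this table, `checkout-service` can produce to `payments.events` but cannot consume from it, and an application with no entry is denied everything.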

PayPal Kafka Libraries

The Kafka platform team also developed various libraries to increase developer productivity.

Client library - for facilitating connection with clusters and sending or receiving messages seamlessly.

Monitoring library - to support generation and publishing of metrics for the client applications that are producing or consuming Kafka topics.

Security library - to authenticate the applications during startup.

The good thing about all these libraries is that they are supported in multiple programming languages, such as Java, Go, and Python.

Kafka QA Clusters

The team also built QA clusters for testing. They enable developers to easily test their changes in production-like environments, which improves the quality of the development effort and leaves less room for mistakes.

Monitoring and Alerting

The second aspect of operating Kafka at scale at PayPal is to have proper monitoring and alerting mechanisms in place. Kafka itself has a lot of built-in metrics, which were fine-tuned by the platform team to improve monitoring and alerting as a whole.

Configuration Management System

The third aspect of the platform is the use of the in-house configuration management system. Metadata related to clusters, brokers, topics, applications, etc. are stored in this system. This system acts as a source of truth for all metadata of various components of the Kafka platform. In case of disaster, the data is used for recovering the clusters.

Enhancements and Automation

There are some automations in place to enable seamless integrations across the platform. One example is onboarding a new topic.

Teams submit requests for new Kafka topics on the Kafka Onboarding UI. After the request is approved, a create topic request is sent to the Kafka Control Plane, which creates the topic and ACL in the Kafka platform.
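The onboarding flow described above can be modelled roughly like this. The `TopicRequest` and `ControlPlane` classes are hypothetical stand-ins for the Onboarding UI request and the Kafka Control Plane; the real system's interfaces are not described in this post.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class ControlPlane:
    """Toy control plane that tracks topics and which apps may access them."""

    topics: Set[str] = field(default_factory=set)
    acls: Dict[str, Set[str]] = field(default_factory=dict)  # topic -> allowed apps

    def create_topic(self, topic: str, owner_app: str) -> None:
        # Creating the topic and its ACL together mirrors the described flow.
        self.topics.add(topic)
        self.acls.setdefault(topic, set()).add(owner_app)


@dataclass
class TopicRequest:
    topic: str
    owner_app: str
    approved: bool = False


def process_request(request: TopicRequest, control_plane: ControlPlane) -> bool:
    """Forward the request to the control plane only once it is approved."""
    if not request.approved:
        return False
    control_plane.create_topic(request.topic, request.owner_app)
    return True


plane = ControlPlane()
request = TopicRequest(topic="orders.created", owner_app="orders-service")
before_approval = process_request(request, plane)  # nothing is created yet
request.approved = True
after_approval = process_request(request, plane)   # topic and ACL are created
```

The point of the sketch is the ordering: nothing reaches the control plane until the approval step has happened.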

The platform also has support for different enhancements, like security patches or optimisation for repartitioning.

Key Takeaways

So what are the key takeaways from the effort? The authors mention some learnings for operating Kafka at scale:

Tooling is crucial. Have necessary tools and automations in place.

Alerting and monitoring are critical for the success of such a system.

Have well-defined access control.

Benchmark the performance of the clusters in different environments (on-premise vs public cloud).

And that marks the end of today’s episode! Don’t forget to read the articles through. As I always emphasise, reading engineering blogs will help you grow as an engineer and increase your breadth of knowledge of the vast field of software engineering. Use them to your advantage!

Till the next episode, goodbye! ✌️
