Leveraging Graphs for Fraud Detection | Biweekly Engineering - Episode 35

Fraudsters are hard nuts to crack!

Fraudulent behaviour is a recurrent phenomenon on consumer-facing websites like Amazon, Uber, or Booking.com.

The more a business grows, the more critical it becomes to combat fraud. And guess what, fraudsters keep pushing their limits by coming up with innovative techniques! Likewise, companies have to keep innovating to fight back and shield themselves from losing money. 🛡️

Today, we learn how Booking.com leverages graph technologies to build a fraud prevention platform.

Apart from that, we feature GitHub's take on what developers should know about Generative AI, the hot cake of the tech industry today! 🍰

And hey, welcome to the 35th episode of Biweekly Engineering! I know I have not been particularly regular with the episodes, but 35 sounds like a lot! Looking forward to the 50th episode. :)

June 2022 - A gloomy afternoon in Bruges, Belgium

How Booking.com Leverages Graphs for Fraud Detection

Interconnected Nature of Fraud Attacks

A critical insight driving Booking.com’s fraud prevention efforts is the interconnected nature of fraudulent activities.

Fraudsters often operate through linked actors, identifiers, and requests, where patterns emerge through shared data points. For example, a previously flagged email address could be reused for further attacks, or someone linked to that email address could be a potential fraudster.

To efficiently map these connections, Booking.com has leveraged graphs—a mathematical framework ideal for representing linked data.

This technology allows the team to quickly identify fraud links, uncover hidden relationships, and take immediate actions to block suspicious activities before there is significant damage.

Leveraging Graphs

The key idea is to think of fraudulent behaviours as graphs.

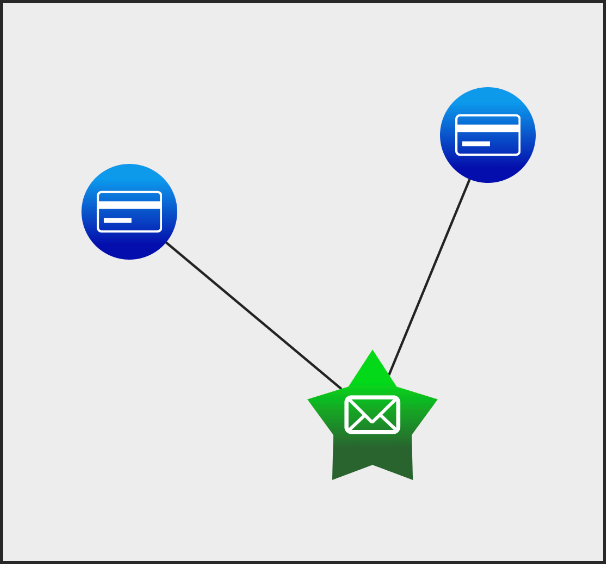

Booking.com uses historical data to build a graph where nodes represent transaction identifiers like account numbers or credit card details, and edges connect identifiers that have appeared together.
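To make the idea concrete, here is a minimal sketch of how such a graph could be assembled in memory. It is not Booking.com's implementation: the function name `build_identifier_graph`, the `kind:value` identifier strings, and the sample transactions are all made up for illustration, and a plain Python dictionary stands in for a real graph database.

```python
from collections import defaultdict
from itertools import combinations


def build_identifier_graph(transactions):
    """Return an adjacency map: identifier -> set of identifiers seen with it."""
    graph = defaultdict(set)
    for identifiers in transactions:
        unique = sorted(set(identifiers))
        for identifier in unique:
            graph[identifier]  # make sure isolated identifiers still get a node
        # Every pair of identifiers that co-occur in one transaction gets an edge.
        for a, b in combinations(unique, 2):
            graph[a].add(b)
            graph[b].add(a)
    return graph


# Hypothetical historical data: each transaction is a list of identifiers.
history = [
    ["account:alice", "card:1111", "ip:10.0.0.1"],
    ["account:bob", "card:1111", "ip:10.0.0.2"],
    ["account:carol", "card:2222", "ip:10.0.0.2"],
]

graph = build_identifier_graph(history)
print(sorted(graph["card:1111"]))
# ['account:alice', 'account:bob', 'ip:10.0.0.1', 'ip:10.0.0.2']
```

In this toy data, the shared card connects Alice's and Bob's accounts even though the two never appear in the same transaction.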

This graph data is stored in a graph database—in this case, JanusGraph.

When assessing fraud risk, the system queries the graph to create a localized view around the request, helping to identify potential fraud based on previous connections.

For example, imagine a reservation request where the credit card used is linked to multiple accounts in past transactions. By querying the graph database, the system can quickly retrieve a local graph that shows all these connected accounts, along with any shared identifiers such as IP addresses or device fingerprints.

Now, if the same credit card has been used across accounts with prior fraud history, or if other identifiers in the network raise red flags, the system can flag the transaction as high risk for further investigation or automated action.
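The lookup described above can be sketched as a bounded breadth-first search around the identifiers in a request. This is only an illustration under stated assumptions: the graph is a hand-written dictionary rather than a JanusGraph query, and `local_graph`, `linked_fraud`, `max_hops`, and the sample identifiers are hypothetical names chosen for this example.

```python
from collections import deque


def local_graph(graph, start_nodes, max_hops=2):
    """Breadth-first search: map each identifier within max_hops to its hop distance."""
    distance = {node: 0 for node in start_nodes}
    queue = deque(distance)
    while queue:
        node = queue.popleft()
        if distance[node] == max_hops:
            continue  # do not expand beyond the local neighbourhood
        for neighbour in graph.get(node, ()):
            if neighbour not in distance:
                distance[neighbour] = distance[node] + 1
                queue.append(neighbour)
    return distance


def linked_fraud(graph, request_identifiers, flagged, max_hops=2):
    """Return previously flagged identifiers found near the request."""
    nearby = local_graph(graph, request_identifiers, max_hops)
    return sorted(node for node in nearby if node in flagged)


# Hypothetical graph built from past transactions (assumed data).
graph = {
    "account:dave": {"card:1111"},
    "card:1111": {"account:dave", "account:alice", "account:bob"},
    "account:alice": {"card:1111", "ip:10.0.0.1"},
    "account:bob": {"card:1111", "ip:10.0.0.2"},
    "ip:10.0.0.1": {"account:alice"},
    "ip:10.0.0.2": {"account:bob", "account:carol"},
    "account:carol": {"ip:10.0.0.2"},
}
flagged = {"account:bob"}  # identifiers with prior fraud history

# A new account paying with a card that is already in the graph.
request = ["account:erin", "card:1111"]
print(linked_fraud(graph, request, flagged))
# ['account:bob']
```

The hop limit keeps the view local, so the search stays cheap and distant, weak connections are ignored. A hit like this is a signal for further checks rather than a verdict on its own.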

The article shares a detailed example of how a graph is built. Make sure to check it out.

Note that the graph structure and the database are part of a platform that detects fraud for Booking.com. Querying the database and finding a link is not enough to detect fraud reliably and correctly. As you can imagine, there are machine learning models involved.

GitHub's Take: What You Should Know about Generative AI

Generative AI has taken the world by storm.

Generative AI, and more specifically LLMs, has become the new shiny technology that everyone is trying to use to solve their problems. My manager recently put it nicely:

Generative AI is a solution that’s looking for problems!

While it's alluring to solve problems using generative AI, not every one of us is an AI or ML engineer. And we don't need to be. Having a high-level idea of the technology can be a good start for most developers.

That's what we get from GitHub's article today.

Use Cases of Generative AI

Generative AI now has a wide range of use cases. It differs from traditional AI: traditional AI identifies patterns to perform specific tasks, whereas generative AI creates new outputs based on natural language prompts. Key use cases include:

Text generation: With roots in AI research from the 1970s, text generation has evolved with models like GPT, which mimic human-like responses based on extensive training data.

Image generation: AI tools can generate images from text, with applications like Stable Diffusion gaining popularity for creating visuals that match descriptive prompts.

Video generation: By manipulating existing videos with styles or prompts, tools like Stable Diffusion are advancing video creation, exemplified by projects such as stable-diffusion-videos on GitHub.

Code generation: Tools like GitHub Copilot generate programming code, offer code suggestions, or translate code between languages, streamlining software development.

Data generation: Generating synthetic data, often used by self-driving car companies, helps enhance machine learning models without relying on real user data, offering privacy advantages.

Language translation: Combining natural-language understanding (NLU) with generative AI, these tools provide real-time translation of human or programming languages, expanding accessibility.

How Generative AI Works

The article delves into how generative AI models use neural networks to create new content by identifying patterns in data. Neural networks, modeled after the human brain's neurons, form the basis of machine learning and deep learning, enabling AI systems to process vast amounts of data such as text, code, or images. These models are trained by adjusting their parameters to minimize errors, improving accuracy through continuous learning.
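As a toy illustration of "adjusting parameters to minimize errors", the sketch below fits a straight line with gradient descent. It is far simpler than a neural network and is not taken from the GitHub article: the function `train`, the learning rate, the step count, and the data points are all assumptions made for this example.

```python
def train(points, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # parameters start at arbitrary values
    n = len(points)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in points) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in points) / n
        # Nudge each parameter in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Made-up training data lying exactly on the line y = 2x + 1.
points = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train(points)
print(round(w, 3), round(b, 3))
```

With these settings the learned values should end up very close to 2 and 1. Real generative models follow the same loop in spirit, but with billions of parameters and far more data.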

Generative AI relies heavily on algorithms, which not only enable machines to learn from data but also optimize outputs and make informed decisions. However, building generative AI models is complex due to the enormous data and computational resources required.

Several types of generative AI models are discussed in the article:

Large Language Models (LLMs): These models process and generate natural language text, trained on vast datasets like books and websites. They have broad applications in virtual assistants, chatbots, and text generation (e.g., ChatGPT).

Generative Adversarial Networks (GANs): GANs use two neural networks—a generator that creates data and a discriminator that evaluates it. The adversarial process between these networks improves data quality until the generated content is indistinguishable from real data.

Transformer-based Models: These models excel at understanding sequential data, making them highly effective in natural language processing tasks such as translation and question-answering. GPT-4 is an example.

Variational Autoencoders (VAEs): VAEs compress data into smaller representations and generate new content similar to the original. They’re commonly used in image, video, and audio generation.

The above (and also the article) is a fairly hand-wavy explanation of how gen AI works. If you are curious, I would recommend doing your own research!

That marks the end for today. Hope you enjoyed and learned something new. Until the next one, adios! 🖖
