The Case Against Multi-Agents | Biweekly Engineering - Episode 42

Why Cognition thinks multi-agent systems should be avoided

If you have been following developments in AI, you have probably heard AI evangelists claim that 2025 would be the year of AI agents, with multiple agents collaborating to accomplish tasks that have historically required humans.

Tons of startups were founded, VC money flooded the streets, and every John and Rebecca thought AI agents would replace half of all jobs. Journalists wrote article after article explaining how bleak the future was.

Guess what: 2025 didn’t turn out to be the year of AI agents. As Andrej Karpathy said, we are more likely in the decade of AI agents. There is still a long way to go.

AI agents haven’t lived up to the hype yet, let alone multi-agent systems. Today, Biweekly Engineering features an article from Cognition that makes the case against multi-agent architectures.

On top of Innsbruck, Austria

What is an AI agent?

Let’s start with a bit of a refresher - what is an AI agent?

An AI agent is a system designed to perceive its environment, reason about it, and take actions toward a goal, often autonomously or semi-autonomously.

In practice, that definition can mean very different things depending on context - from a chatbot that helps answer emails to a software system that plans and executes complex workflows using tools or APIs.

More formally, an AI agent is a program that can:

Observe the world (through inputs like text, data, or API results).

Decide what to do next (using reasoning or a language model).

Act on that decision (by producing output or calling a tool).

Learn or adapt over time (sometimes by remembering past actions or results).
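To make these four capabilities concrete, here is a minimal Python sketch of the observe, decide, act, remember loop. It is a toy and not how any particular product is built: the `Agent` class, the `rule_based_decide` function and the `check_inbox` tool are all hypothetical, and the decision step is a hard-coded rule standing in for a language model call.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Agent:
    """Toy agent (hypothetical): observe -> decide -> act, with a memory of past steps."""
    decide: Callable[[str, list[str]], str]   # stand-in for a language model call
    tools: dict[str, Callable[[], str]]
    memory: list[str] = field(default_factory=list)

    def step(self, observation: str) -> str:
        # Observe: record the new input.
        self.memory.append(f"observed: {observation}")
        # Decide: choose an action from the observation and everything remembered so far.
        action = self.decide(observation, self.memory)
        # Act: call a tool if the action names one, otherwise treat it as plain output.
        result = self.tools[action]() if action in self.tools else action
        # Remember: store the outcome so later decisions can depend on it.
        self.memory.append(f"{action} -> {result}")
        return result

def rule_based_decide(observation: str, memory: list[str]) -> str:
    # Hypothetical stand-in for a model: check the inbox once, then reply.
    already_checked = any(entry.startswith("check_inbox") for entry in memory)
    return "Thanks, I'll reply shortly." if already_checked else "check_inbox"

agent = Agent(decide=rule_based_decide, tools={"check_inbox": lambda: "1 unread message"})
print(agent.step("new email arrived"))  # 1 unread message
print(agent.step("new email arrived"))  # Thanks, I'll reply shortly.
```

Here the "learning" is nothing more than a growing memory list, which is the simplest form of adaptation mentioned above: remembering past actions or results.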

An awesome example of a successful AI agent is Claude Code (or similar tools).

What are multi-agents?

With that in place, multi-agent systems are easy to understand.

A multi-agent system is a setup where multiple AI agents work together to solve a task.

Each agent has its own role, reasoning loop, and context, and they communicate or coordinate to reach a shared goal.

In theory, this allows parallel work and specialization — for example, one agent plans, another executes, and another reviews.
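The plan, execute, review split can be sketched in a few lines. This sketch assumes each role keeps a private context and only sees the messages sent to it directly. The `RoleAgent` class and the `run_pipeline` function are hypothetical, no model is called, and the plan is hard-coded.

```python
from dataclasses import dataclass, field

@dataclass
class RoleAgent:
    """One agent in a multi-agent setup: a role plus its own private context (hypothetical)."""
    role: str
    context: list[str] = field(default_factory=list)

    def receive(self, message: str) -> None:
        # An agent only ever sees the messages sent to it directly.
        self.context.append(message)

def run_pipeline(goal: str) -> dict[str, list[str]]:
    planner, executor, reviewer = RoleAgent("planner"), RoleAgent("executor"), RoleAgent("reviewer")
    planner.receive(f"goal: {goal}")
    # The planner splits the goal into subtasks (hard-coded here; no model is called).
    subtasks = ["subtask 1", "subtask 2"]
    for task in subtasks:
        executor.receive(f"task: {task}")      # the executor sees tasks, not the goal
        reviewer.receive(f"output of {task}")  # the reviewer sees outputs, not the goal
    return {agent.role: agent.context for agent in (planner, executor, reviewer)}

contexts = run_pipeline("ship a small game")
for role, ctx in contexts.items():
    print(role, ctx)
```

No single agent holds the whole picture. The reviewer judges outputs without ever seeing the goal, and that gap is what the Cognition post goes on to criticize.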

But that’s the theory. Is it really a good idea to build multi-agent systems yet? This is where I find Cognition’s blog post pretty interesting. Let’s dive in.

The case against multi-agents

The blog post offers arguments against the current fashion for multi-agent architectures. Instead of celebrating parallelism and complexity, it asks a more fundamental question: how do we build systems that think coherently over time?

The answer, as the authors suggest, lies not in multiplying agents but in mastering context.

The shift towards context engineering

The article opens with a comparison to the early web. Back then, developers had all the raw materials (HTML, CSS, and JavaScript) but no consistent way to organize them.

Frameworks like React or Rails eventually emerged because they offered more than tools - they gave developers a philosophy of structure. Cognition believes AI systems are at the same stage now.

This is where they introduce the idea of context engineering, which they position as the next step beyond prompt engineering.

Prompt engineering is about crafting good instructions. Context engineering, on the other hand, is about managing what the model knows at each point in time.

It’s an architectural discipline. It asks: how is information remembered, how is it passed forward, and how does the model interpret its own history?

By framing things this way, Cognition argues that reliable AI behavior depends not on clever wording but on the thoughtful management of information flow.

Why multi-agent systems go wrong

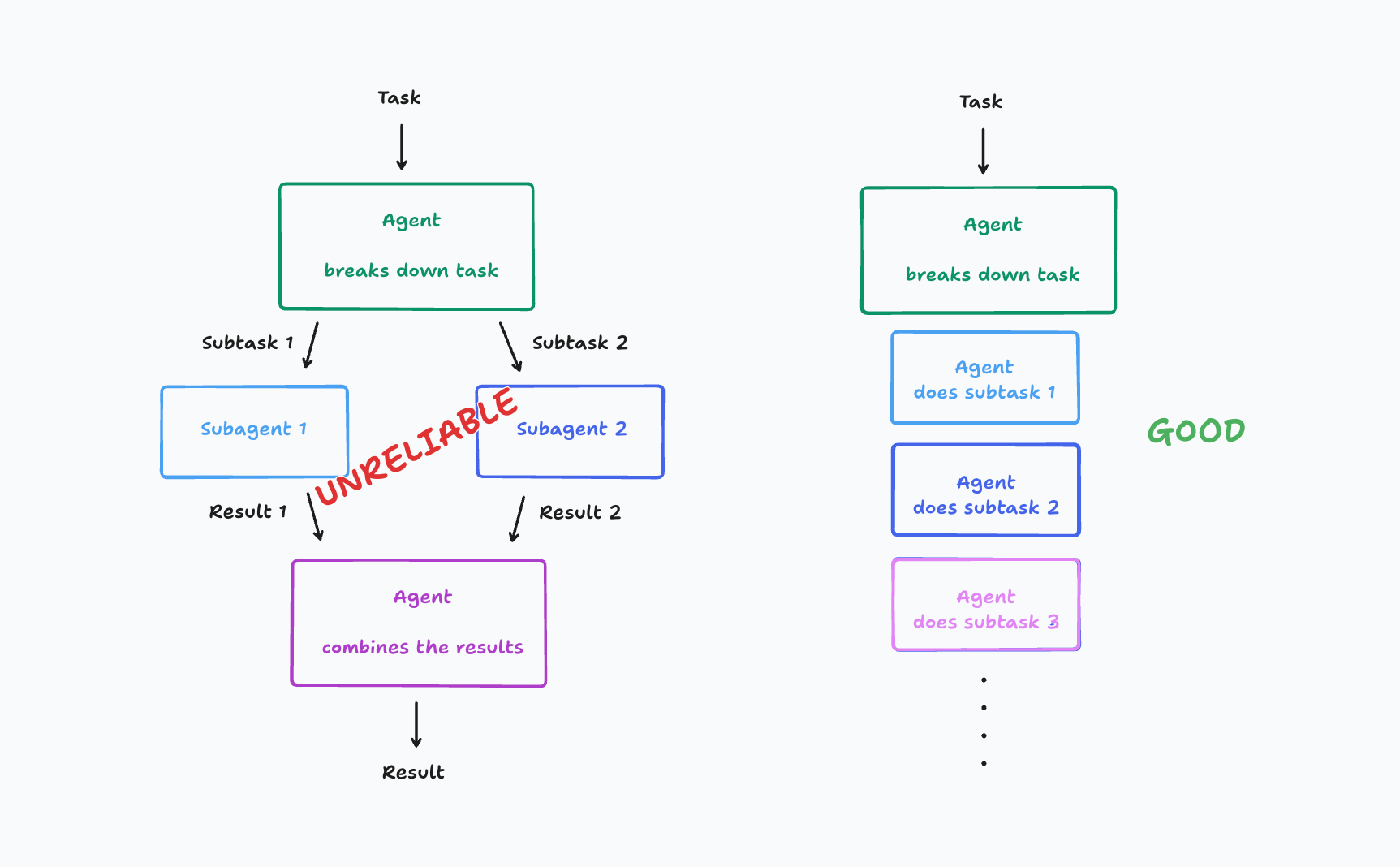

The post takes aim at the now-popular idea of creating teams of AI agents that collaborate on tasks. The usual pattern is familiar: a “manager” agent breaks a goal into subtasks and assigns each one to a specialized “worker” agent.

It looks elegant in diagrams, but the authors show how quickly it falls apart. Each sub-agent builds its own assumptions about what matters, leading to inconsistencies in both reasoning and output.

Cognition uses a simple example: tell one agent to design the background for a Flappy Bird clone and another to build the bird itself. Without shared context, you might end up with a Mario-style world and a photorealistic bird. Both parts are competent, but the combination is incoherent.
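The failure is easy to reproduce in a toy model. Here, the hypothetical `worker` function stands in for a sub-agent. If it can see a style decision someone has already made, it follows that decision. Otherwise it falls back on its own default assumption. The style strings are illustrative and are not taken from Cognition's post.

```python
from typing import Optional

def worker(task: str, default_style: str,
           decisions: Optional[dict[str, str]]) -> dict[str, str]:
    """Hypothetical sub-agent that builds one asset and has to pick an art style."""
    if decisions is not None and "style" in decisions:
        style = decisions["style"]      # follow a choice already recorded in shared context
    else:
        style = default_style           # nothing to follow: fall back on its own assumption
        if decisions is not None:
            decisions["style"] = style  # record the choice so later steps can see it
    return {"task": task, "style": style}

# Isolated context: each sub-agent only sees its own subtask.
isolated = [worker("background", "Mario-style pixel art", None),
            worker("bird", "photorealistic", None)]

# Shared context: both sub-agents read and write the same decision record, in order.
decisions: dict[str, str] = {}
shared = [worker("background", "Mario-style pixel art", decisions),
          worker("bird", "photorealistic", decisions)]

print(sorted({asset["style"] for asset in isolated}))  # ['Mario-style pixel art', 'photorealistic']
print(sorted({asset["style"] for asset in shared}))    # ['Mario-style pixel art']
```

The shared case runs the workers one after another, so the second one can read what the first decided. Sharing context only helps if a decision is visible before the next step acts on it.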

The root problem is fragmented context: each agent makes decisions in isolation. The article distills this into two principles that I found quite interesting:

Share context and traces - every agent needs visibility into the full conversation and decision history, not just its immediate prompt.

Actions imply decisions - every step taken is a choice that shapes what follows. When separate agents make conflicting choices, the system drifts away from its goal.
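One way to picture both principles together is as a shared trace, where every action is logged along with the decisions it implicitly commits to. The hypothetical `Action` record and `find_conflicts` function check such a trace for places where a later action contradicts an earlier one. The field names and example decisions are assumptions made for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class Action:
    """One step in a shared trace, plus the decisions it implicitly commits to (hypothetical)."""
    agent: str
    description: str
    implied: dict[str, str] = field(default_factory=dict)

def find_conflicts(trace: list[Action]) -> list[str]:
    """Return a message for every action that contradicts a decision made earlier in the trace."""
    committed: dict[str, tuple[str, str]] = {}  # decision key -> (value, agent that chose it)
    problems: list[str] = []
    for action in trace:
        for key, value in action.implied.items():
            if key not in committed:
                committed[key] = (value, action.agent)
            elif committed[key][0] != value:
                first_value, first_agent = committed[key]
                problems.append(f"{key}: {first_agent} chose {first_value!r}, "
                                f"{action.agent} chose {value!r}")
    return problems

trace = [
    Action("agent-1", "scaffold the API server", {"language": "Python", "database": "Postgres"}),
    Action("agent-2", "write the data access layer", {"language": "Go", "database": "Postgres"}),
]
print(find_conflicts(trace))  # ["language: agent-1 chose 'Python', agent-2 chose 'Go'"]
```

If agent-2 had received the full trace instead of only its own subtask, it would have seen the earlier choice of Python before acting. Detecting the conflict afterwards is the weaker fallback.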

The authors conclude that architectures violating these principles should be ruled out from the start. Once context and decision logic are fragmented, reliability is nearly impossible to recover.

Simpler architectures work

Instead of assembling fleets of agents, Cognition recommends simpler, linear designs. One main agent should own the full context and execute tasks sequentially. When the history grows too long for the context window, compress or summarize it, but keep a single, continuous thread of reasoning.
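Here is a minimal sketch of that single-threaded design. It assumes that context size is measured in words rather than real tokens, and that older history gets folded into a summary that keeps only the recorded decisions. The `LinearAgent` class, the `DECISION:`/`SUMMARY:` prefixes and the rule-based `summarize` function are all hypothetical. A real system would more likely use a language model for the compression step, and this stub only imitates one.

```python
from dataclasses import dataclass, field

def word_count(entries: list[str]) -> int:
    # Crude stand-in for a tokenizer: count whitespace-separated words.
    return sum(len(entry.split()) for entry in entries)

def summarize(entries: list[str]) -> str:
    # Hypothetical rule-based compressor: keep only recorded decisions,
    # including those carried forward by an earlier summary.
    kept: list[str] = []
    for entry in entries:
        if entry.startswith("SUMMARY: "):
            kept.extend(p for p in entry[len("SUMMARY: "):].split(" | ") if p)
        elif entry.startswith("DECISION:"):
            kept.append(entry)
    return "SUMMARY: " + " | ".join(kept)

@dataclass
class LinearAgent:
    budget: int            # maximum context size, in words (an assumed unit)
    keep_recent: int = 2   # newest entries always kept verbatim (must be >= 1)
    context: list[str] = field(default_factory=list)

    def record(self, entry: str) -> None:
        """Append one step to the single thread, compressing older history if over budget."""
        self.context.append(entry)
        if word_count(self.context) > self.budget and len(self.context) > self.keep_recent:
            older = self.context[:-self.keep_recent]
            recent = self.context[-self.keep_recent:]
            # Older steps are folded into one summary instead of being handed to another agent.
            self.context = [summarize(older)] + recent

agent = LinearAgent(budget=20)
for step in ["DECISION: use pixel-art style for all assets",
             "drew background tiles and sky gradient",
             "DECISION: bird sprite is 16x16 pixels",
             "drew bird sprite with three animation frames",
             "added collision detection"]:
    agent.record(step)
# Prints: SUMMARY: DECISION: use pixel-art style for all assets | DECISION: bird sprite is 16x16 pixels
print(agent.context[0])
```

Because there is only ever one thread, compression loses detail but never splits the agent's view of what has already been decided. Keeping only decisions is an aggressive simplification. Choosing which details survive is the hard part.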

For now, I think this is the pragmatic approach.

In most real systems, reliability matters more than parallelism. Linear agents are easier to debug, trace, and reason about. They might be slower, but they fail in predictable ways and can be improved iteratively.

Putting the principles to work

To illustrate these ideas, the post looks at real systems.

Claude Code, for instance, occasionally delegates subtasks, but everything happens in one linear thread. The context is preserved throughout, so the model never loses sight of the bigger picture.

By contrast, “edit-apply” systems, where one model writes instructions and another executes them, tend to misfire. The executor interprets instructions differently, and small misunderstandings multiply into errors.

The takeaway: every hand-off between agents is an opportunity for context loss. Until we have robust ways to synchronize context between models, multi-agent architectures will remain fragile.

That’s all for today. It was a super interesting read on cutting-edge technologies in the industry!

I know the episodes haven’t been as frequent as they should be, but things have become super busy for me! Still, I hope to continue the newsletter, even if at a lower frequency.

Hopefully, the next one comes soon enough. Until then, keep pushing yourself to learn more! 🏇
